Courtney Paquette (McGill)

Modern machine learning (ML) applications grapple with the challenges posed by high-dimensional datasets and high-dimensional parameter spaces. Stochastic gradient descent (SGD) and its variants have emerged as the go-to algorithms for optimizing in this expansive space. However, the classical analysis of algorithms, rooted in low-dimensional geometry, often leads to misconceptions in the realm of modern ML.

In this talk, we delve into the intricacies of high-dimensional phenomena in machine learning optimization landscapes. The talk begins with an exploration of typical stochastic algorithms and emphasizes the crucial role that high-dimensionality plays in shaping their behavior.

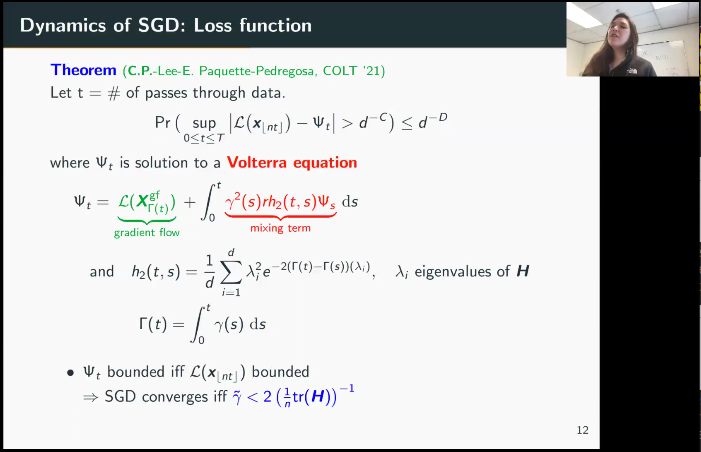

Drawing on tools from high-dimensional probability, stochastic differential equations (SDEs), random matrix theory, and optimization, we present a framework for analyzing the dynamics of SGD in scenarios where both the number of samples and parameters are large. The resulting limiting dynamics are governed by an ordinary differential equation (ODE), providing valuable insights into algorithmic choices such as hyper-parameters. We demonstrate the applicability of this framework on real datasets, highlighting its alignment with actual performance.

Bio: Courtney Paquette is an assistant professor at McGill University and a CIFAR Canada AI chair, MILA. Paquette’s research broadly focuses on designing and analyzing algorithms for large-scale optimization problems, motivated by applications in data science. She received her PhD from the mathematics department at the University of Washington (2017), held postdoctoral positions at Lehigh University (2017-2018) and University of Waterloo (NSF postdoctoral fellowship, 2018-2019), and was a research scientist at Google Research, Brain Montreal (2019-2020).